👋 Hi there, I’m Hrithick

🧑‍💻 I have 3.8 years of experience as a Data Scientist, working mostly on NLP, Vision, and Multimodal Deep Learning. My journey started with implementing object detection on drones 🛸, then developing question answering systems using knowledge graphs 📚, and later extracting information from documents with the Pix2Struct, Donut, and LayoutLM models 📄. Most recently, I have been building chatbots with LLMs, vector databases, and advanced RAG pipelines.

🎓📃 My interests are in Large Language Models (LLMs), Multimodal Deep Learning, and Generative Adversarial Networks (GANs). 🌐

📝 I’m currently reading academic papers on LLMs. 📚 I also believe software engineering skills are crucial, so I’m actively practicing data structures and algorithms on LeetCode. 💻

💻 Work Experience

💼 OGMA IT LLC

Developed ‘The Credit Genius’, a RAG-based chatbot for question answering over the client’s written material and video lectures. Used advanced retrieval techniques such as Sentence Window Retrieval and Hypothetical Document Embeddings (HyDE) in the RAG pipeline to improve answer relevancy, context recall, and faithfulness. Delivered the project end-to-end, Dockerizing the application and deploying it on an AWS EC2 instance.
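Sentence Window Retrieval matches the query against individual sentences but hands the LLM a window of neighboring sentences around each hit, so matching stays precise while the answer still gets surrounding context. A minimal sketch of that idea, assuming a toy bag-of-words cosine similarity in place of a real embedding model and vector database; the function names and the `top_k`/`window` parameters are illustrative, not taken from the project:

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase word tokens; a stand-in for a real embedding model."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def sentence_window_retrieve(sentences: list[str], query: str, top_k: int = 1, window: int = 1) -> list[str]:
    """Score the query against single sentences, then return each top hit
    expanded with `window` neighboring sentences on both sides."""
    q = Counter(tokenize(query))
    ranked = sorted(
        range(len(sentences)),
        key=lambda i: cosine(q, Counter(tokenize(sentences[i]))),
        reverse=True,
    )
    contexts = []
    for i in ranked[:top_k]:
        lo, hi = max(0, i - window), min(len(sentences), i + window + 1)
        contexts.append(" ".join(sentences[lo:hi]))
    return contexts


docs = [
    "A credit score summarizes repayment history.",
    "Late payments lower the score.",
    "Paying down revolving balances reduces utilization.",
    "Utilization is the ratio of balances to limits.",
]
print(sentence_window_retrieve(docs, "What is credit utilization ratio?"))
```

Raising `window` trades prompt length for more context; in a production pipeline the scoring step would be a vector-database query over sentence embeddings.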

Built a multimodal DocumentAI system for extracting information from logistics tickets, cutting the time and cost spent manually verifying trucking tickets by 91%. Version 1 used a fine-tuned pix2struct-textcaps model for information extraction; the system later transitioned to Claude 3 Opus.

💼 Openstream.ai

- Collaborated with the team to build and deploy question answering systems leveraging knowledge graphs (Cypher and Neo4j) and language models (BERT, T5, DistilBERT).

- Built a pipeline to automatically extract structured tables from documents into a knowledge graph using Table Transformer (DETR fine-tuned to detect tables) and YOLO.
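A detected table can be loaded into Neo4j by turning each row into (subject, predicate, object) triples. The sketch below assumes a hypothetical schema (first column = entity name, every other column = a `HAS` relationship to a `Value` node) and only builds parameterized Cypher strings; it is not the pipeline's actual schema, and table detection itself (Table Transformer/YOLO) is out of scope:

```python
# Hypothetical schema: (Entity {name})-[:HAS {column}]->(Value {text}).
# Relationship types cannot be passed as Cypher parameters, so the
# column header is stored as a relationship property instead.
MERGE_TRIPLE = (
    "MERGE (s:Entity {name: $subject}) "
    "MERGE (o:Value {text: $object}) "
    "MERGE (s)-[:HAS {column: $predicate}]->(o)"
)


def table_to_triples(header: list[str], rows: list[list[str]]) -> list[tuple[str, str, str]]:
    """Turn a table (header + rows of cell strings) into triples, skipping empty cells."""
    triples = []
    for row in rows:
        subject = row[0].strip()
        for column, cell in zip(header[1:], row[1:]):
            if cell.strip():
                triples.append((subject, column.strip(), cell.strip()))
    return triples


def to_cypher_params(triples: list[tuple[str, str, str]]) -> list[tuple[str, dict]]:
    """Pair the MERGE template with one parameter dict per triple."""
    return [(MERGE_TRIPLE, {"subject": s, "predicate": p, "object": o}) for s, p, o in triples]


header = ["Product", "Price", "Stock"]
rows = [["Widget", "4.99", "120"], ["Gadget", "12.50", ""]]
for query, params in to_cypher_params(table_to_triples(header, rows)):
    print(params)
```

Each `(query, params)` pair could then be executed with the official `neo4j` Python driver via `session.run(query, params)`.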

💼 Kesowa [MLE Intern]

- Optimized the tree detection model for large aerial images (>25GB), improving its performance metrics and reducing training time, and enhanced post-processing for shapefile generation to enable visualization in QGIS.

- Built an automated attendance system for company employees that uses a Siamese network for face recognition/verification, and deployed it on a Jetson Nano.
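In a Siamese setup, two face crops pass through the same encoder, and the pair is accepted as one identity when the distance between their embeddings falls below a threshold; a contrastive loss is one common training objective. A minimal sketch of those two steps, assuming the embeddings are already computed; the vectors and the `threshold` and `margin` values are made-up illustrations, and the deployed system may have used a different loss or distance:

```python
import math


def euclidean(a: list[float], b: list[float]) -> float:
    """Distance between two embeddings produced by the shared encoder."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def contrastive_loss(a: list[float], b: list[float], same: bool, margin: float = 1.0) -> float:
    """One common form of contrastive loss: pull matching pairs together,
    push non-matching pairs at least `margin` apart."""
    d = euclidean(a, b)
    return d ** 2 if same else max(0.0, margin - d) ** 2


def verify(a: list[float], b: list[float], threshold: float = 0.6) -> bool:
    """Accept the pair as the same person if the distance is under the threshold."""
    return euclidean(a, b) < threshold


enrolled = [0.1, 0.9, 0.3]       # stored embedding for an employee (illustrative)
probe_same = [0.15, 0.85, 0.35]  # new capture of the same person (illustrative)
probe_other = [0.9, 0.1, 0.4]    # someone else (illustrative)
print(verify(enrolled, probe_same), verify(enrolled, probe_other))  # True False
```

Only the enrolled embeddings need to be stored, so adding an employee does not require retraining, which suits an edge device such as the Jetson Nano.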

📚 Research Paper Implementations

Implemented the Transformer from the paper “Attention Is All You Need” using TensorFlow 2.0. 📄 This project gave me a practical understanding of the Transformer model and of Transformer-based models such as GPT and BERT. 🤖 The model was trained for English-to-Spanish machine translation. 🇬🇧➡️🇪🇸 The code can be found here.
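The core operation of that paper is scaled dot-product attention, softmax(QKᵀ / √d_k)·V. A dependency-free sketch of the arithmetic, using plain Python lists rather than the TensorFlow tensors the project used, with a single head and no masking:

```python
import math


def softmax(xs: list[float]) -> list[float]:
    """Numerically stable softmax over one row of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(Q, K, V):
    """softmax(Q K^T / sqrt(d_k)) V on lists of row vectors.
    Shapes: Q is n_q x d_k, K is n_k x d_k, V is n_k x d_v; result is n_q x d_v."""
    d_k = len(K[0])
    out = []
    for q in Q:
        # One score per key: dot product scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in K]
        weights = softmax(scores)
        # Weighted sum of the value rows.
        out.append([sum(w * v[j] for w, v in zip(weights, V)) for j in range(len(V[0]))])
    return out


Q = [[1.0, 0.0]]
K = [[1.0, 0.0], [0.0, 1.0]]
V = [[10.0, 0.0], [0.0, 10.0]]
print(scaled_dot_product_attention(Q, K, V))
```

The query aligns with the first key, so the output leans toward the first row of `V`; dividing by √d_k keeps the softmax from saturating as d_k grows.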

- Implemented the Vision Transformer from the paper “An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale”, which significantly influenced vision models by applying transformers to image classification and achieving state-of-the-art results on ImageNet. My implementation provides an easy way to load and fine-tune Vision Transformer models. The code can be found here.
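The “16x16 words” in the title are non-overlapping 16×16 pixel patches: a 224×224 RGB image becomes (224/16)² = 196 patches, each flattened to 16·16·3 = 768 values before the linear projection. A small sketch of just the patch-splitting step on a nested-list image, with no framework, projection, or position embeddings, and assuming the image sides are divisible by the patch size:

```python
def patchify(image, patch: int):
    """Split an H x W x C image (nested lists) into non-overlapping
    patch x patch tiles in raster order, each flattened row-major into one vector."""
    h, w = len(image), len(image[0])
    if h % patch or w % patch:
        raise ValueError("image sides must be divisible by the patch size")
    patches = []
    for top in range(0, h, patch):
        for left in range(0, w, patch):
            flat = []
            for r in range(top, top + patch):
                for c in range(left, left + patch):
                    flat.extend(image[r][c])  # all C channels of this pixel
            patches.append(flat)
    return patches


# A 224 x 224 RGB image of zeros, split into 16 x 16 patches.
img = [[[0.0, 0.0, 0.0] for _ in range(224)] for _ in range(224)]
tokens = patchify(img, 16)
print(len(tokens), len(tokens[0]))  # 196 768
```

With 32×32 patches, as in the ViT-L/32 variant, the same image would give only 49 tokens, which is why the /32 models are cheaper to run.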

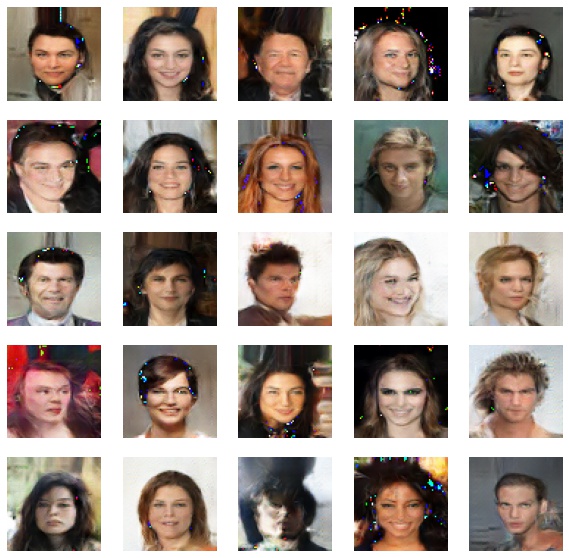

```python
# Load any ViT model
from vit import viT

vit_large = viT(vit_size="ViT-LARGE32")
vit_large.from_pretrained(pretrained_top=True)
```

- Reproduced the paper “Unsupervised Representation Learning with Deep Convolutional Generative Adversarial Networks”, also known as DCGAN, to generate faces. The model was implemented from scratch using TensorFlow 1.x (tf.compat.v1) and, after 150 epochs of training, generated reasonable-quality fake face images. The code can be found here.
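DCGAN’s generator upsamples a projected latent vector with stride-2 transposed convolutions. With explicit padding, a transposed convolution’s output side is (in − 1)·stride − 2·padding + kernel; assuming kernel 4, stride 2, padding 1 (a common DCGAN configuration, not necessarily the one used in this implementation), each layer doubles the spatial size from a 4×4 start up to a 64×64 image. A quick check:

```python
def conv_transpose_out(size: int, kernel: int = 4, stride: int = 2, padding: int = 1) -> int:
    """Output side length of a transposed convolution with explicit padding
    (no output padding, dilation 1)."""
    return (size - 1) * stride - 2 * padding + kernel


def generator_sizes(start: int = 4, layers: int = 4) -> list[int]:
    """Feature-map side lengths from the reshaped latent to the output image."""
    sizes = [start]
    for _ in range(layers):
        sizes.append(conv_transpose_out(sizes[-1]))
    return sizes


print(generator_sizes())  # [4, 8, 16, 32, 64]
```

In TensorFlow, a stride-2 `conv2d_transpose` with `'SAME'` padding reaches the same doubling by setting the output shape to in·stride directly.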
